Authors:

(1) Clement Lhos, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA;

(2) Emek Barış Küçüktabak, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA and Center for Robotics and Biosystems, Northwestern University, Evanston, IL, USA;

(3) Lorenzo Vianello, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA;

(4) Lorenzo Amato, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA and The Biorobotics Institute, Scuola Superiore Sant’Anna, 56025 Pontedera, Italy and Department of Excellence in Robotics & AI, Scuola Superiore Sant’Anna, 56127 Pisa, Italy;

(5) Matthew R. Short, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA and Department of Biomedical Engineering, Northwestern University, Evanston, IL, USA;

(6) Kevin Lynch, Center for Robotics and Biosystems, Northwestern University, Evanston, IL, USA;

(7) Jose L. Pons, Legs and Walking Lab of Shirley Ryan AbilityLab, Chicago, IL, USA, Center for Robotics and Biosystems, Northwestern University, Evanston, IL, USA and Department of Biomedical Engineering, Northwestern University, Evanston, IL, USA.


Abstract

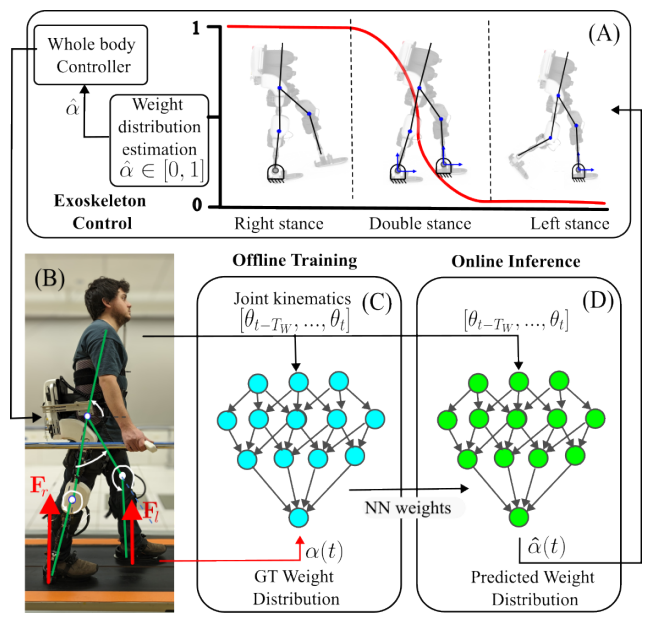

In the control of lower-limb exoskeletons with feet, the phase in the gait cycle can be identified by monitoring the weight distribution at the feet. This phase information can be used in the exoskeleton’s controller to compensate for the dynamics of the exoskeleton and to assign impedance parameters. Typically, the weight distribution is calculated using data from sensors such as treadmill force plates or insole force sensors. However, these solutions increase both the setup complexity and the cost. For this reason, we propose a deep-learning approach that uses a short time window of joint kinematics to predict the weight distribution of an exoskeleton in real time. The model was trained on treadmill walking data from six users wearing a four-degree-of-freedom exoskeleton and was tested in real time on three users wearing the same device. Two of these test users were not present in the training set, which demonstrates the model’s ability to generalize across individuals. Results show that the proposed method fits the actual weight distribution with R² = 0.9 and is suitable for real-time control, with prediction times of less than 1 ms. Closed-loop exoskeleton control experiments show that deep-learning-based weight distribution estimation can replace force sensors in overground and treadmill walking.

I. INTRODUCTION

Lower-limb exoskeletons are increasingly being utilized in everyday tasks and rehabilitation for individuals with gait impairments. In clinical settings, these exoskeletons are typically classified into two main types: partial assistance and full mobilization. Full mobilization exoskeletons are designed for patients with severe motor control disorders, providing autonomous movement of the legs regardless of the patient’s input [1]. Conversely, partial assistance exoskeletons aid in the patient’s movement while still requiring their active input. In this paper, we focus on partial assistance exoskeletons, as they are commonly employed in rehabilitation. Depending on the functional level of the patient, these exoskeletons use techniques like haptic guidance/assistance [2] or error augmentation/resistance [3] to facilitate the (re)learning of walking behaviors. Implementing both haptic assistance and resistance strategies requires precise control over the interaction torques between the user and the exoskeleton, ensuring effective and safe physical interaction.

Typically, the control of the interaction between a user and an exoskeleton adopts a hierarchy of control levels: high-level, mid-level, and low-level [1], [4]. For lower-limb exoskeletons, the high-level controller calculates the desired interaction torques tailored to specific ambulatory activities, such as walking on flat terrain, stairs, or ramps. The role of the mid-level controller is to estimate the various states within an activity, for example, identifying the gait states (e.g., swing and stance) during walking, and to set the desired interaction torque behavior accordingly. Finally, the low-level controller is responsible for compensating for the exoskeleton’s dynamics and generating motor commands based on the desired interaction torque profile identified by the mid-level controller.
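The data flow through this hierarchy can be pictured as three chained functions. Everything in the following sketch is hypothetical: the function names, the single "level_walking" profile with zero (transparent) torques, the four-joint layout, and the thresholding of a weight-distribution signal alpha (0 = left stance, 1 = right stance) into discrete states are placeholders for illustration, not the controllers of [1], [4] or of this work.

```python
from typing import Dict, List

Torque = List[float]  # one entry per joint (N·m); 4 joints assumed for illustration


def high_level(activity: str) -> Dict[str, Torque]:
    """Hypothetical: return the desired interaction-torque profile for an activity,
    keyed by gait state. Zeros stand in for a transparent (zero-torque) mode."""
    zero = [0.0] * 4
    profiles = {
        "level_walking": {"left_stance": zero, "double_stance": zero, "right_stance": zero},
    }
    return profiles[activity]


def mid_level(alpha: float, profile: Dict[str, Torque], band: float = 0.05) -> tuple:
    """Hypothetical: label the gait state from alpha (0 = left stance,
    1 = right stance) and pick the matching desired interaction torque."""
    if alpha <= band:
        state = "left_stance"
    elif alpha >= 1.0 - band:
        state = "right_stance"
    else:
        state = "double_stance"
    return state, profile[state]


def low_level(desired: Torque, compensation: Torque) -> Torque:
    """Hypothetical: add compensation of the exoskeleton's own dynamics
    to the desired interaction torque to form the motor command."""
    return [d + c for d, c in zip(desired, compensation)]


def control_step(activity: str, alpha: float, compensation: Torque) -> dict:
    """One control cycle: high level -> mid level -> low level."""
    profile = high_level(activity)
    state, desired = mid_level(alpha, profile)
    return {"gait_state": state, "motor_torque": low_level(desired, compensation)}
```

Keeping each level as a separate function mirrors the separation of concerns described above: the activity choice, the gait-state estimate, and the dynamics compensation can each be replaced without touching the others.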

In this three-level approach, accurate real-time estimation of the gait state at the mid and low levels is essential for properly controlling the exoskeleton. A common approach to gait state detection is to use gait events (e.g., heel strike, toe-off) to identify switches between discrete gait states: left stance, right stance, and double stance [5], [6], [1]. However, a smooth transition between these states is vital to ensure a control input that limits unexpected behaviors for the user. Küçüktabak et al. [7] proposed a whole-exoskeleton closed-loop compensation (WECC) controller that uses the ratio of ground reaction forces (GRF) (i.e., the weight distribution) to approximate the double stance phase as a transition between left and right stance. The weight distribution, quantified as the stance interpolation factor α, changes smoothly from zero (left stance) to one (right stance) during double stance, as visualized in Fig. 1. Nonetheless, the additional sensors required for GRF measurement, such as treadmill force plates or insoles, increase the complexity and cost of these systems.
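One plausible way to compute α from the two vertical GRFs is the right foot’s share of the total load, α = F_R / (F_L + F_R), which equals 0 when only the left foot is loaded (left stance) and 1 when only the right foot is loaded (right stance). This definition, the clipping of negative readings, the even-split fallback, and the linear blending rule below are assumptions for illustration; [7] may define, filter, or apply α differently.

```python
from typing import List


def stance_interpolation_factor(f_left: float, f_right: float, min_total: float = 1e-6) -> float:
    """Right foot's share of the total vertical GRF: 0 = left stance, 1 = right stance.

    Assumed definition: alpha = F_R / (F_L + F_R). Negative readings (sensor
    noise) are clipped to zero before forming the ratio.
    """
    f_left = max(f_left, 0.0)
    f_right = max(f_right, 0.0)
    total = f_left + f_right
    if total < min_total:
        # Neither foot loaded (e.g. sensor dropout): fall back to an even split.
        return 0.5
    return f_right / total


def blend(tau_left_stance: List[float], tau_right_stance: List[float], alpha: float) -> List[float]:
    """Linearly interpolate per-joint torques between the left- and right-stance
    models (assumed blending rule, for illustration only)."""
    return [(1.0 - alpha) * tl + alpha * tr for tl, tr in zip(tau_left_stance, tau_right_stance)]
```

During a double-stance weight transfer in which F_L falls from body weight to zero while F_R rises, α sweeps continuously from 0 to 1, so the blended torque moves smoothly from the left-stance value to the right-stance value instead of jumping at a detected gait event.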

Machine learning models have demonstrated their ability to estimate gait states using only the joint kinematics of humans walking without exoskeletons, thus eliminating the need for GRF measurements [8], [9], [10]. Motivated by these results, recent studies have proposed real-time estimation of gait states with lower-limb exoskeletons. For instance, Jung et al. [11] and Liu et al. [12] used data from joint encoders and an inertial measurement unit (IMU) positioned on the trunk to classify the gait cycle during walking. These studies divided the gait cycle into two (i.e., stance and swing) and eight gait states, respectively. However, discrete control states result in torque or modeling discontinuities. Camardella et al. [13] and Lippi et al. [14] addressed this issue by implementing linear regression and neural network (NN) regression models with joint angles as inputs. The output of these models transitions smoothly between left and right stance, providing a continuous representation of the gait state. Despite this advancement, these approaches have some limitations. First, they only consider the present joint configuration and discard past information. This might lead to inaccurate predictions, because the weight distribution is influenced not only by the current posture but also by the velocity and acceleration of the motion. Moreover, these studies give little consideration to generalization across subjects and to prediction time. The latter is essential for real-time implementation of the exoskeleton’s whole-body controller.
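The argument about instantaneous posture can be made concrete: two instants with the same joint angles but opposite joint velocities look identical to a posture-only model, whereas a short window of past samples still distinguishes them, because velocity and acceleration can be recovered from it by finite differences. The following is a minimal, hypothetical buffer for such a window; the class name, window length, and sample period are illustrative assumptions, and the models in [13], [14] and in this work may preprocess their inputs differently.

```python
from collections import deque
from typing import List, Tuple


class KinematicWindow:
    """Fixed-length buffer holding the most recent joint-angle samples.

    The window length and sample period passed in are illustrative
    assumptions, not the values used in this work.
    """

    def __init__(self, n_samples: int, dt: float) -> None:
        if n_samples < 3:
            raise ValueError("need at least 3 samples for an acceleration estimate")
        self.buf = deque(maxlen=n_samples)  # oldest sample is dropped automatically
        self.dt = dt

    def push(self, joint_angles: List[float]) -> None:
        """Append the newest sample (one angle per joint, in rad)."""
        self.buf.append(list(joint_angles))

    def ready(self) -> bool:
        """True once the window holds n_samples samples."""
        return len(self.buf) == self.buf.maxlen

    def flattened(self) -> List[float]:
        """Samples concatenated oldest to newest: a windowed model's input vector."""
        return [q for sample in self.buf for q in sample]

    def finite_differences(self) -> Tuple[List[float], List[float]]:
        """Backward-difference joint velocity and acceleration at the newest sample."""
        q0, q1, q2 = self.buf[-3], self.buf[-2], self.buf[-1]
        vel = [(b - a) / self.dt for a, b in zip(q1, q2)]
        acc = [(c - 2.0 * b + a) / self.dt ** 2 for a, b, c in zip(q0, q1, q2)]
        return vel, acc
```

For example, pushing the first-joint angle sequences 0.0, 0.1, 0.2 rad and 0.4, 0.3, 0.2 rad into two windows with dt = 0.01 s yields the same newest posture but backward-difference velocities of roughly +10 and -10 rad/s. A `deque` with `maxlen` gives constant-time appends and discards the oldest sample on its own, which suits a fixed-rate real-time loop.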

To address the aforementioned limitations, we present a deep-learning approach for estimating the weight distribution of a user wearing a lower-limb exoskeleton. This approach uses a short time window of kinematic data from sensors integrated in the exoskeleton. To validate the predictions, we compared the proposed approach with the method in [14], which only uses the instantaneous posture. Using distinct users for training and testing resulted in a user-independent estimation, eliminating the need for a calibration phase. The proposed deep-learning method was trained on walking data from six users, and its closed-loop performance was evaluated on three users (two of whom were not included in the training set) during treadmill and overground walking. The results showed that the weight distribution can be accurately estimated from kinematic data, with an R² of 0.9 averaged across users. In addition, the approach met real-time requirements, achieving a prediction time of less than 1 ms.
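The reported R² is averaged across users. A minimal sketch of such a metric, assuming the coefficient of determination R² = 1 - SS_res / SS_tot is computed separately on each test user’s recording and each user is weighted equally in the mean (the exact aggregation is not stated in this section, and the function names are hypothetical):

```python
from statistics import fmean
from typing import Dict, List, Tuple


def r_squared(y_true: List[float], y_pred: List[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or len(y_true) < 2:
        raise ValueError("need two equal-length sequences with at least 2 samples")
    mean_true = fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("y_true is constant; R^2 is undefined")
    return 1.0 - ss_res / ss_tot


def mean_r_squared_across_users(trials: Dict[str, Tuple[List[float], List[float]]]) -> float:
    """Average of per-user R^2 values (each user weighted equally,
    regardless of how many samples their recording contains)."""
    return fmean(r_squared(y, y_hat) for y, y_hat in trials.values())
```

Averaging per-user scores rather than pooling all samples keeps a single long recording from dominating the result; with pooled samples, a user with more gait cycles would carry proportionally more weight.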

This paper is available on arxiv under CC BY 4.0 DEED license.