Authors:

(1) Zane Weissman, Worcester Polytechnic Institute Worcester, MA, USA {zweissman@wpi.edu};

(2) Thomas Eisenbarth, University of Lübeck Lübeck, S-H, Germany {thomas.eisenbarth@uni-luebeck.de};

(3) Thore Tiemann, University of Lübeck Lübeck, S-H, Germany {t.tiemann@uni-luebeck.de};

(4) Berk Sunar, Worcester Polytechnic Institute Worcester, MA, USA {sunar@wpi.edu}.

Table of Links

- Abstract and Introduction

- Background

- Threat Models

- Analysis of Firecracker's Containment Systems

- Analysis of Microarchitectural Attack and Defenses in Firecracker Microvms

- Conclusion, Acknowledgments, and References

2. BACKGROUND

2.1 KVM

The Linux kernel-based virtual machine (KVM) [29] provides an abstraction of the hardware-assisted virtualization features, such as Intel VT-x or AMD-V, that are available in modern CPUs. To support near-native execution, a guest mode is added to the Linux kernel in addition to the existing kernel mode and user mode. When a process is in guest mode, KVM causes the hardware to enter the hardware virtualization mode, which replicates ring 0 and ring 3 privileges.[1]

With KVM, I/O virtualization is performed mostly in user space by the process that created the VM, referred to as the VMM or hypervisor, in contrast to earlier hypervisors which typically required a separate hypervisor process [41]. A KVM hypervisor provides each VM guest with its own memory region that is separate from the memory region of the process that created the guest. This is true for guests created from kernel space as well as from user space. Each VM maps to a process on the Linux host and each virtual CPU assigned to the guest is a thread in that host process. The VM’s userspace hypervisor process makes system calls to KVM only when privileged execution is required, minimizing context switching and reducing the VM-to-kernel attack surface. Besides driving performance improvements across all sorts of applications, this design has allowed for the development of lightweight hypervisors that are especially useful for sandboxing individual programs and supporting cloud environments where many VMs are running at the same time.
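To make the "system calls to KVM" concrete, the following minimal sketch shows how a userspace process might query KVM through its device node. It assumes a Linux host that exposes `/dev/kvm`; the helper name `kvm_api_version` is hypothetical and chosen for illustration, while the request number follows the `_IO(KVMIO, 0x00)` definition of `KVM_GET_API_VERSION` in `<linux/kvm.h>`. A real VMM such as Firecracker goes on to create VMs and vCPUs through further ioctls, which this sketch does not attempt.

```python
import fcntl
import os

# ioctl request number from <linux/kvm.h>: _IO(KVMIO, 0x00) with KVMIO = 0xAE.
KVM_GET_API_VERSION = 0xAE00


def kvm_api_version(path="/dev/kvm"):
    """Return the KVM API version as an int, or None if KVM is unavailable.

    `path` is a parameter only so the function can be exercised on hosts
    without KVM; on a normal Linux host the device node is /dev/kvm.
    """
    try:
        fd = os.open(path, os.O_RDWR | os.O_CLOEXEC)
    except OSError:
        # No device node, or this user lacks permission to open it.
        return None
    try:
        # A single ioctl on the device file is the whole "system call to KVM".
        return fcntl.ioctl(fd, KVM_GET_API_VERSION, 0)
    except OSError:
        return None
    finally:
        os.close(fd)
```

Calling `kvm_api_version()` on a host without KVM support, or without permission to open the device, simply returns `None`.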

2.2 Serverless Cloud Computing

An increasingly popular model for cloud computing is serverless computing, in which the CSP manages scalability and availability of the servers that run the user’s code. One implementation of serverless computing is called function-as-a-service (FaaS). In this model, a cloud user defines functions that are called as necessary through the service provider’s application programming interface (API) (hence the name “function-as-a-service”) and the CSP manages resource allocation on the server that executes the user’s function (hence the name “serverless computing”—the user does no server management). Similarly, container-as-a-service (CaaS) computing runs containers, portable runtime packages, on demand. The centralized server management of FaaS and CaaS is economically attractive to both CSPs and users. The CSP can manage its users’ workloads however it pleases, optimize for minimal operating cost, and implement flexible pricing where users pay for the execution time that they use. The user does not need to worry about server infrastructure design or management, and so reduces development costs and outsources maintenance cost to the CSP at a relatively small and predictable rate.

2.3 MicroVMs and AWS Firecracker

FaaS and CaaS providers use a variety of systems to manage running functions and containers. Container systems like Docker, Podman, and LXD provide a convenient and lightweight way to package and run sandboxed applications in any environment. However, compared to the virtual machines used for many more traditional forms of cloud computing, containers offer less isolation and therefore less security. In recent years, major CSPs have introduced microVMs that back traditional containers with lightweight virtualization for extra security [1, 55]. The efficiency of hardware virtualization with KVM and the lightweight design of microVMs mean that code in virtualized, containerized, or container-like systems can run nearly as fast as unvirtualized code and with overhead comparable to that of a traditional container.

Firecracker [1] is a microVM developed by AWS to isolate each of the AWS Lambda FaaS and AWS Fargate CaaS workloads in a separate VM. It only supports Linux guests on x86 or ARM Linux-KVM hosts and provides a limited number of devices that are available to guest systems. These limitations allow Firecracker to be very light-weight in the size of its code base and in memory overhead for a running VM, as well as very quick to boot or shut down. Additionally, the use of KVM lightens the requirements of Firecracker, since some virtualization functions are handled by kernel system calls and the host OS manages VMs as standard processes. Because of its small code base written in Rust, Firecracker is assumed to be very secure, even though security flaws have been identified in earlier versions (see CVE-2019-18960). Interestingly, the Firecracker white paper declares microarchitectural attacks to be in-scope of its attacker model [1] but lacks a detailed security analysis or special countermeasures against microarchitectural attacks beyond common secure system configuration recommendations for the guest and host kernel. The Firecracker documentation does provide system security recommendations [8] that include a specific list of countermeasures, which we cover in section 2.6.1.

2.4 Meltdown and MDS

In 2018, the Meltdown [32] attack showed that speculatively accessed data could be exfiltrated across security boundaries by encoding it into a cache side-channel. This soon led to a whole class of similar attacks, known as microarchitectural data sampling (MDS), including Fallout [14], Rogue In-flight Data Load (RIDL) [50], TSX Asynchronous Abort (TAA) [50], and Zombieload [46]. These attacks all follow the same general pattern to exploit speculative execution:

(1) The victim runs a program that handles secret data, and the secret data passes through a cache or CPU buffer.

(2) The attacker runs a specifically chosen instruction that will cause the CPU to mistakenly predict that the secret data will be needed. The CPU forwards the secret data to the attacker’s instruction.

(3) The forwarded secret data is used as the index for a memory read to an array that the attacker is authorized to access, causing a particular line of that array to be cached.

(4) The CPU finishes checking the data and decides that the secret data was forwarded incorrectly, and reverts the execution state to before it was forwarded, but the state of the cache is not reverted.

(5) The attacker probes all of the array to see which line was cached; the index of that line is the value of the secret data.
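The encode-and-probe part of this pattern, steps (3) to (5), can be illustrated with a toy simulation. This is only a sketch under strong assumptions: the cache is modeled as a set of "cached" line indices instead of real hardware timing, and all names (`ToyCache`, `transient_access`, `probe`, `leak_bytes`) are hypothetical. It does not perform or reproduce an actual attack.

```python
LINE_COUNT = 256  # one probe-array line per possible byte value


class ToyCache:
    """Stand-in for the cache: remembers which probe-array lines are cached."""

    def __init__(self):
        self.cached = set()

    def flush(self):
        self.cached.clear()

    def touch(self, line):
        self.cached.add(line)

    def is_fast(self, line):
        # A real attacker would time a memory load; here we just look it up.
        return line in self.cached


def transient_access(cache, forwarded_byte):
    # Step (3): the forwarded secret indexes the attacker's probe array,
    # which pulls exactly one line into the cache.
    cache.touch(forwarded_byte)
    # Step (4): the CPU rolls back the architectural state, so nothing is
    # returned here, but the cache state above is left in place.


def probe(cache):
    # Step (5): check every line; the single fast one reveals the byte.
    hits = [line for line in range(LINE_COUNT) if cache.is_fast(line)]
    return hits[0] if len(hits) == 1 else None


def leak_bytes(secret):
    """Recover `secret` one byte at a time through the toy cache channel."""
    recovered = bytearray()
    for byte in secret:
        cache = ToyCache()
        cache.flush()
        transient_access(cache, byte)
        recovered.append(probe(cache))
    return bytes(recovered)
```

In this model `leak_bytes(b"key")` recovers the input exactly. On real hardware the probe step is noisy and typically has to be repeated many times per byte.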

The original Meltdown vulnerability targeted cache forwarding and allowed data extraction in this manner from any memory address that was present in the cache. MDS attacks target smaller and more specific buffers in the on-core microarchitecture, and so make up a related but distinct class of attacks that are mitigated in a significantly different way. While Meltdown targets the main memory that is updated relatively infrequently and shared across all cores, threads, and processes, MDS attacks tend to target buffers that are local to cores (though sometimes shared across threads) and updated more frequently during execution.

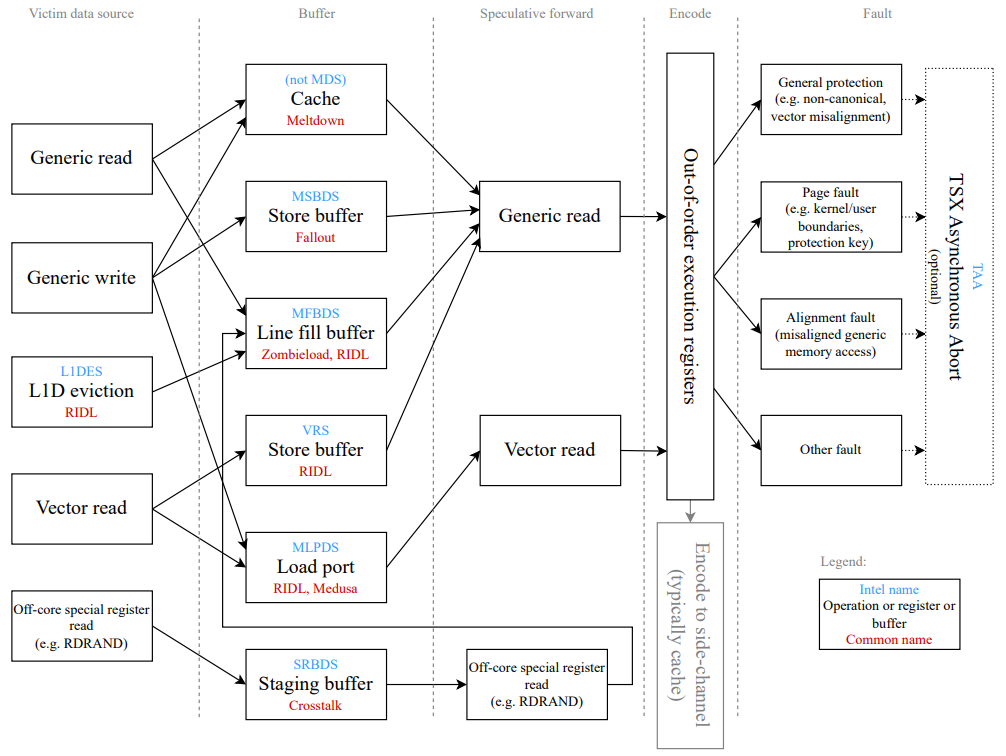

2.4.1 Basic MDS Variants. Figure 1 charts the major known MDS attack pathways on Intel CPUs and the names given to different variants by Intel and by the researchers who reported them. Most broadly, Intel categorizes MDS vulnerabilities in their CPUs by the specific buffer from which data is speculatively forwarded, since these buffers tend to be used for a number of different operations. RIDL MDS vulnerabilities can be categorized as Microarchitectural Load Port Data Sampling (MLPDS), for variants that leak from the CPU’s load port, and Microarchitectural Fill Buffer Data Sampling (MFBDS), for variants that leak from the CPU’s LFB. Along the same lines, Intel calls the Fallout vulnerability Microarchitectural Store Buffer Data Sampling (MSBDS), as it involves a leakage from the store buffer. Vector Register Sampling (VRS) is a variant of MSBDS that targets data that is handled by vector operations as it passes through the store buffer. VERW bypass exploits a bug in the microcode fixes for MFBDS that loads stale and potentially secret data into the LFB. The basic mechanism of leakage is the same, and VERW bypass can be considered a special case of MFBDS. L1 Data Eviction Sampling (L1DES) is another special case of MFBDS, where data that is evicted from the L1 data cache passes through the LFB and becomes vulnerable to an MDS attack. Notably, L1DES is a case where the attacker can actually trigger the secret data’s presence in the CPU (by evicting it), whereas other MDS attacks rely directly on the victim process accessing the secret data to bring it into the right CPU buffer.

2.4.2 Medusa. Medusa [37] is a category of MDS attacks classified by Intel as MLPDS variants [25]. The Medusa vulnerabilities exploit the imperfect pattern-matching algorithms used to speculatively combine stores in the write-combine (WC) buffer of Intel processors. Intel considers the WC buffer to be part of the load port, so Intel categorizes this vulnerability as a case of MLPDS. There are three known Medusa variants which each exploit a different feature of the write-combine buffer to cause a speculative leakage:

Cache Indexing: a faulting load is speculatively combined with an earlier load with a matching cache line offset.

Unaligned Store-to-Load Forwarding: a valid store followed by a dependent load that triggers a misaligned memory fault causes random data from the WC buffer to be forwarded.

Shadow REP MOV: a faulting REP MOV instruction followed by a dependent load leaks the data of a different REP MOV.

2.4.3 TSX Asynchronous Abort. The hardware vulnerability TSX Asynchronous Abort (TAA) [24] provides a different speculation mechanism for carrying out an MDS attack. While standard MDS attacks access restricted data with a standard speculated execution, TAA uses an atomic memory transaction as implemented by TSX. When an atomic memory transaction encounters an asynchronous abort, for example because another process reads a cache line marked for use by the transaction or because the transaction encounters a fault, all operations in the transaction are rolled back to the architectural state before the transaction started. However, during this rollback, instructions inside the transaction that have already started execution can continue speculative execution, as in steps (2) and (3) of other MDS attacks. TAA impacts all Intel processors that support TSX, and in the case of certain newer processors that are not affected by other MDS attacks, MDS mitigations or TAA-specific mitigations (such as disabling TSX) must be implemented in software to protect against TAA [24].

2.4.4 Mitigations. Though Meltdown and MDS-class vulnerabilities exploit low level microarchitectural operations, they can be mitigated with microcode firmware patches on most vulnerable CPUs.

Page table isolation. Historically, kernel page tables have been included in user-level process page tables so that a user-level process can make a system call to the kernel with minimal overhead. Page table isolation (first proposed by Gruss et al. as KAISER [19]) maps only the bare minimum necessary kernel memory into the user page table and introduces a second page table only accessible by the kernel. With the user process unable to access the kernel page table, accesses to all but a small and specifically chosen fraction of kernel memory are stopped before they reach the lower level caches where a Meltdown attack begins.

Buffer overwrite. MDS attacks that target on-core CPU buffers require a lower-level and more targeted defense. Intel introduced a microcode update that overwrites vulnerable buffers when the first-level data (L1d) cache (a common target of cache timing side-channel attacks) is flushed or the VERW instruction is run [25]. The kernel can then protect against MDS attacks by triggering a buffer overwrite when switching to an untrusted process.

The buffer overwrite mitigation targets MDS attacks at their source, but is imperfect to say the least. Processes remain vulnerable to attacks from concurrently running threads on the same core when SMT is enabled, since both threads share vulnerable buffers without the active process actually changing on either thread. Furthermore, shortly after the original buffer overwrite microcode update, the RIDL team found that on some Skylake CPUs, buffers were overwritten with stale and potentially sensitive data [50], and remained vulnerable even with mitigations enabled and SMT disabled. Still other processors are vulnerable to TAA but not to non-TAA MDS attacks; these did not receive a buffer overwrite microcode update and as such require that TSX be disabled completely to prevent MDS attacks [20, 24].
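On Linux, the kernel reports the state of these mitigations as short status strings under `/sys/devices/system/cpu/vulnerabilities/` (for example the files `mds`, `tsx_async_abort`, and `meltdown`). The sketch below shows one way such a string might be interpreted, including the SMT caveat described above. The helper names are hypothetical, and the parsing assumes the common `state; SMT ...` layout of these files, which may differ between kernel versions.

```python
from pathlib import Path

VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def parse_status(text):
    """Split a status line into (state, extras).

    Example input: "Mitigation: Clear CPU buffers; SMT vulnerable"
    """
    parts = [part.strip() for part in text.strip().split(";")]
    return parts[0], parts[1:]


def assess(text):
    """Summarize one status line as a small dict."""
    state, extras = parse_status(text)
    return {
        "state": state,
        # "Not affected" and "Mitigation: ..." both mean no known exposure
        # for a single thread; anything else is treated as unmitigated.
        "mitigated": state == "Not affected" or state.startswith("Mitigation"),
        # Buffers shared between sibling threads stay exposed under SMT.
        "smt_vulnerable": "SMT vulnerable" in extras,
    }


def read_assessment(name, vuln_dir=VULN_DIR):
    """Read and assess one vulnerability file; None if it is not readable."""
    try:
        text = (Path(vuln_dir) / name).read_text()
    except OSError:
        return None
    return assess(text)
```

For instance, `assess("Mitigation: Clear CPU buffers; SMT vulnerable")` reports the buffer overwrite as active while still flagging the cross-thread exposure.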

2.5 Spectre

In 2018, Jann Horn and Paul Kocher [30] independently reported the first Spectre variants. Since then, many different Spectre variants [22, 30, 31, 33] and sub-variants [10, 13, 16, 28, 52] have been discovered. Spectre attacks make the CPU speculatively access memory that is architecturally inaccessible and leak the data into the architectural state. Therefore, all Spectre variants consist of three components [27]:

The first component is the Spectre gadget that is speculatively executed. Spectre variants are usually separated by the source of the misprediction they exploit. For example, the outcome of a conditional direct branch is predicted by the Pattern History Table (PHT). Mispredictions of the PHT can lead to a speculative bounds check bypass for load and store instructions [13, 28, 30]. The branch target of an indirect jump is predicted by the Branch Target Buffer (BTB). If an attacker can influence the result of a misprediction of the BTB, then speculative return-oriented programming attacks are possible [10, 13, 16, 30]. The same is true for predictions served by the Return Stack Buffer (RSB) that predicts return addresses during the execution of return instructions [13, 31, 33]. Recent results showed that some modern CPUs use the BTB for their return address predictions if the RSB underflows [52]. Another source of Spectre attacks is the prediction of store-to-load dependencies. If a load is mispredicted to not depend on a previous store, it speculatively executes on stale data, which may lead to a speculative store bypass [22]. None of these gadgets is exploitable by default; each depends on the other two components discussed next.
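The PHT case can be illustrated with a toy predictor. The sketch below uses a textbook 2-bit saturating counter, which is an assumption made for illustration: real PHTs are indexed by branch address and history and are considerably more complex. All names are hypothetical. It shows how repeated in-bounds calls train the predictor so that a later out-of-bounds index passes the bounds check speculatively, even though the architectural result is still rejected.

```python
class TwoBitCounter:
    """Textbook 2-bit saturating counter for one conditional branch.

    States 0 and 1 predict that the bounds check fails; states 2 and 3
    predict that it passes.
    """

    def __init__(self):
        self.state = 0

    def predict_pass(self):
        return self.state >= 2

    def update(self, passed):
        if passed:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def bounds_checked_read(counter, array, index):
    """Return (architectural_value, misspeculated).

    `misspeculated` is True when the predictor guessed "in bounds" for an
    out-of-bounds index, i.e. when the guarded body would run transiently.
    """
    in_bounds = 0 <= index < len(array)
    misspeculated = counter.predict_pass() and not in_bounds
    counter.update(in_bounds)  # the predictor learns the real outcome
    value = array[index] if in_bounds else None
    return value, misspeculated


def train_then_attack(array, bad_index, training_rounds=4):
    """Train with in-bounds reads, then issue one out-of-bounds read."""
    counter = TwoBitCounter()
    for _ in range(training_rounds):
        bounds_checked_read(counter, array, 0)
    return bounds_checked_read(counter, array, bad_index)
```

With a trained counter the out-of-bounds call returns no architectural value but is flagged as misspeculated; with an untrained counter the same call is not.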

The second component is how an attacker controls inputs to the aforementioned gadgets. Attackers may be able to define gadget input values directly through user input, file contents, network packets, or other architectural mechanisms. On the other hand, attackers may be able to inject data into the gadget transiently through load value injection [12] or floating point value injection [42]. Attackers are able to successfully control gadget inputs if they can influence which data or instructions are accessed or executed during the speculation window.

The third component is the covert channel that is used to transfer the speculative microarchitectural state into an architectural state and therefore exfiltrate the speculatively accessed data into a persistent environment. Cache covert channels [39, 40, 54] are applicable if the victim code performs a transient memory access depending on speculatively accessed secret data [30]. If a secret is accessed speculatively and loaded into an on-core buffer, an attacker can rely on an MDS-based channel [14, 46, 50] to transiently transfer the exfiltrated data to the attacker thread, where the data is transferred to the architectural state through, e.g., a cache covert channel. Last but not least, if the victim executes code depending on secret data, the attacker can learn the secret by observing port contention [3, 11, 18, 43, 44].

2.5.1 Mitigations. Many countermeasures were developed to mitigate the various Spectre variants. A specific Spectre variant is effectively disabled if one of the three required components is removed. An attacker without control over inputs to Spectre gadgets is unlikely to successfully launch an attack. The same is true if a covert channel for transforming the speculative state into an architectural state is unavailable. But since this is usually hard to guarantee, Spectre countermeasures mainly focus on stopping mispredictions. Inserting lfence instructions before critical code sections disables speculative execution beyond this point and can therefore be used as a generic countermeasure. But because this approach has a high performance overhead, more specific countermeasures were developed. Spectre-BTB countermeasures include Retpoline [48] and microcode updates like IBRS, STIBP, or IBPB [23]. Spectre-RSB and Spectre-BTB-via-RSB can be mitigated by filling the RSB with values to overwrite malicious entries and prevent the RSB from underflowing or by installing IBRS microcode updates. Spectre-STL can be mitigated by the SSBD microcode update [23]. Another drastic option to stop an attacker from tampering with shared branch prediction buffers is to disable SMT. Disabling SMT effectively partitions branch prediction hardware resources between concurrent tenants at the cost of a significant performance loss.

2.6 AWS’s isolation model

Firecracker is specifically built for serverless and container applications [1] and is currently used by AWS’s Fargate CaaS and Lambda FaaS. In both of these service models, Firecracker is the primary isolation system that supports every individual Fargate task or Lambda event. Both of these service models are also designed for running very high numbers of relatively small and short-lived tasks. AWS itemizes the design requirements for the isolation system that eventually became Firecracker as follows:

Isolation: It must be safe for multiple functions to run on the same hardware, protected against privilege escalation, information disclosure, covert channels, and other risks.

Overhead and Density: It must be possible to run thousands of functions on a single machine, with minimal waste.

Performance: Functions must perform similarly to running natively. Performance must also be consistent, and isolated from the behavior of neighbors on the same hardware.

Compatibility: Lambda allows functions to contain arbitrary Linux binaries and libraries. These must be supported without code changes or recompilation.

Fast Switching: It must be possible to start new functions and clean up old functions quickly.

Soft Allocation: It must be possible to over commit CPU, memory and other resources, with each function consuming only the resources it needs, not the resources it is entitled to. [1]

We are particularly interested in the isolation requirement and stress that microarchitectural attacks are declared in-scope for the Firecracker threat model. The “design” page in AWS’s public Firecracker Git repository elaborates on the isolation model and provides a useful diagram which we reproduce in Figure 2. This diagram pertains mostly to protection against privilege escalation. The outermost layer of protection is the jailer, which uses container isolation techniques to limit Firecracker’s access to the host kernel while running the VMM and other management components of Firecracker as threads of a single process in the host userspace. Within the Firecracker process, the user’s workload is run on other threads. The workload threads execute the guest operating system of the virtual machine and any programs running in the guest. Running the user’s code in the virtual machine guest restricts its direct interaction with the host to prearranged interactions with KVM and certain portions of the Firecracker management threads. So from the perspective of the host kernel, the VMM and the VM including the user’s code are run in the same process. This is the reason why AWS states that each VM resides in a single process. But, since the VM is isolated via hardware virtualization techniques, the user’s code, the guest kernel, and the VMM operate in separate address spaces. Therefore, the guest’s code cannot architecturally or transiently access VMM or guest kernel memory addresses as they are not mapped in the guest’s address space. The remaining microarchitectural attack surface is limited to MDS attacks that leak information from CPU internal buffers ignoring address space boundaries and Spectre attacks where an attacker manipulates the branch prediction of other processes to self-leak information.

![Figure 2: AWS provides this threat containment diagram in a design document in the Firecracker GitHub repository [6]. In this model, the jailer provides container-like protections around Firecracker’s VMM, API, instance metadata service (IMDS), all of which run in the host user space, and the customer’s workload, which runs inside the virtual machine. The VM isolates the customer’s workload in the guest, ensuring that it only directly interacts with predetermined elements of the host (in both user and kernel space).](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-nw93zp7.png)

Not shown in Figure 2, but equally important to AWS’s threat model, is the isolation of functions from each other when hardware is shared, especially in light of the soft allocation requirement. Besides the fact that compromising the host kernel could compromise the security of any guests, microarchitectural attacks that target the host hardware can also threaten user code directly. Since a single Firecracker process contains all the necessary threads to run a virtual machine with a user’s function, soft allocation can simply be performed by the host operating system [1]. This means that standard Linux process isolation systems are in place on top of virtual machine isolation.

2.6.1 Firecracker security recommendations. The Firecracker documentation also recommends the following precautions for protecting against microarchitectural side-channels [8]:

• Disable SMT

• Enable kernel page-table isolation

• Disable kernel same-page merging

• Use a kernel compiled with Spectre-BTB mitigation (e.g., IBRS and IBPB on x86)

• Verify Spectre-PHT mitigation

• Enable L1TF mitigation

• Enable Spectre-STL mitigation

• Use memory with Rowhammer mitigation

• Disable swap or use secure swap
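Some of these recommendations can be checked from the host at runtime. The following sketch covers three of them (SMT, kernel same-page merging, and swap) and assumes the usual Linux interfaces `/sys/devices/system/cpu/smt/control`, `/sys/kernel/mm/ksm/run`, and `/proc/swaps`. The function names are hypothetical, and the check is an illustration only, not a procedure taken from the Firecracker documentation.

```python
from pathlib import Path

SMT_CONTROL = "/sys/devices/system/cpu/smt/control"
KSM_RUN = "/sys/kernel/mm/ksm/run"
PROC_SWAPS = "/proc/swaps"


def read_text(path):
    """Return the file contents, or None if the file cannot be read."""
    try:
        return Path(path).read_text()
    except OSError:
        return None


def smt_disabled(control_text):
    # "off" and "forceoff" mean SMT is disabled; "notsupported" means the
    # CPU has no SMT at all. Any other value is treated as not disabled.
    return control_text.strip() in {"off", "forceoff", "notsupported"}


def ksm_stopped(run_text):
    # "1" means the KSM daemon is merging pages. Note that "0" stops the
    # daemon but keeps already merged pages; "2" also unmerges them.
    return run_text.strip() != "1"


def swap_devices(swaps_text):
    # The first line of /proc/swaps is a header; each later line starts
    # with the name of an active swap device or file.
    lines = swaps_text.strip().splitlines()
    return [line.split()[0] for line in lines[1:] if line.split()]


def host_report():
    """Collect the three checks; a value of None means "could not tell"."""
    smt = read_text(SMT_CONTROL)
    ksm = read_text(KSM_RUN)
    swaps = read_text(PROC_SWAPS)
    return {
        "smt_disabled": None if smt is None else smt_disabled(smt),
        "ksm_stopped": None if ksm is None else ksm_stopped(ksm),
        "swap_devices": None if swaps is None else swap_devices(swaps),
    }
```

A non-empty `swap_devices` list only shows that swap is active; whether that swap is encrypted or otherwise secured has to be established separately.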

This paper is available on arxiv under CC BY-NC-ND 4.0 DEED license.

[1] The virtualized ring 0 and ring 3 are one of the core reasons why near-native code execution is achieved.